MOXF Effects

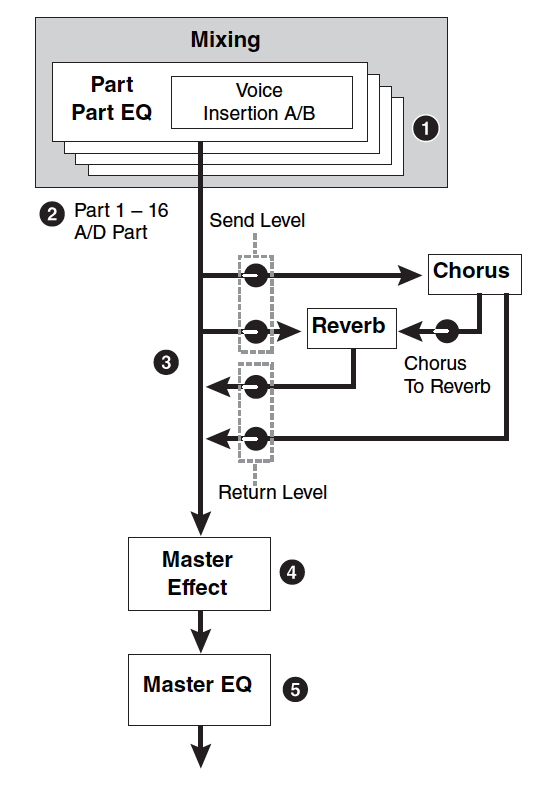

“A picture is worth a thousand words,” some great mind said. Please refer to pages 19-20 of the MOXF Reference Manual for the full graphic story on the Effects routing in the MOXF for VOICE mode, PERFORMANCE mode and SONG/PATTERN MIXING modes. Its simplified flow charts make it very clear where the Effects blocks are and when they are available. We will try to make clear how this affects your use of the instrument to its fullest.

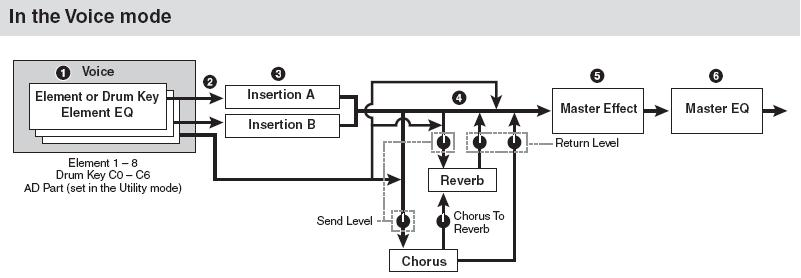

In VOICE mode:

There are up to 8 Elements in a normal MOXF Voice. Each can be individually assigned to the INSERTION EFFECT block, which is a dual block (Insertion A and Insertion B). These two units can be routed in series or in parallel (the routing choices are A-to-B, B-to-A or parallel). Each Element can be routed to “ins A”, to “ins B” or to neither (“thru”). The two SYSTEM EFFECTS (Reverb and Chorus) each have their own send level for the entire Voice (that is, all Elements together). The output of the Chorus can be routed to the Reverb. Each System effect also has an independent RETURN level, which mixes its signal back into the main flow, and a PAN position control. The entire signal then goes on through the MASTER EFFECT and the MASTER EQ (a 5-band EQ), then on to the main stereo output.

1) Element EQ applied to each Element (for a Normal Voice) and each Key (for a Drum Voice)

2) Common EQ applied to all Elements and Keys

3) Selection of which Insertion Effect, A or B, is applied to each Element/Key

4) Insertion Effect A/B related parameters

5) Reverb and Chorus related parameters – These are referred to as the “SYSTEM EFFECTS”

6) Master Effect related parameters

7) Master EQ related parameters
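To make the Element routing concrete, here is a minimal Python sketch. It treats each Insertion unit as a plain function on a single sample value; the function and argument names are hypothetical, the toy "effects" are placeholders, and it assumes that in a serial connection (A>B or B>A) an Element sent to the first unit also passes through the second. It only shows which processors a signal visits, not how the MOXF implements them.

```python
from typing import Callable

Effect = Callable[[float], float]  # toy stand-in for one Insertion unit


def route_element(sample: float, element_out: str, connection: str,
                  ins_a: Effect, ins_b: Effect) -> float:
    """Pass one Element's sample through the dual Insertion block.

    element_out: "thru", "insA" or "insB" (which input the Element feeds)
    connection:  "parallel", "A>B" or "B>A" (how unit A and unit B are wired)
    """
    if element_out == "thru":
        return sample  # bypasses both Insertion units
    if element_out not in ("insA", "insB"):
        raise ValueError(f"unknown element output: {element_out}")
    if connection == "parallel":
        # Each unit processes only the Elements assigned to it.
        return ins_a(sample) if element_out == "insA" else ins_b(sample)
    if connection == "A>B":
        # Feeding A means A then B; feeding B skips A.
        return ins_b(ins_a(sample)) if element_out == "insA" else ins_b(sample)
    if connection == "B>A":
        # Feeding B means B then A; feeding A skips B.
        return ins_a(ins_b(sample)) if element_out == "insB" else ins_a(sample)
    raise ValueError(f"unknown connection: {connection}")


double = lambda x: 2.0 * x     # toy "Insertion A"
plus_one = lambda x: x + 1.0   # toy "Insertion B"
for out in ("thru", "insA", "insB"):
    print(out, route_element(1.0, out, "A>B", double, plus_one))
```

With A>B selected, an Element assigned to “ins A” is processed by both units in turn, which is how a single Element can end up using both Insertion processors.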

An important thing to understand about these VOICE mode effects is that the Insertion Effect assignment can be recalled for up to 8 of the 16 Parts when the Voice is used in a multi-timbral setup in SONG/PATTERN mode, and for all Parts of a PERFORMANCE; even the A/D INPUT Part can recall its two Insertion Effects. More on this point in a minute. There is one A/D INPUT PART setup for all of Voice mode, and your A/D Part can be routed to its own dual Insertion Effects.

What this means in simple terms is: A MOXF Voice can be very complex in terms of how it deals with Effects. Each multi-sample component within a Voice can be routed to one or the other or both or neither of the INSERTION processors. These are the effects that you can control in real time – by assigning important parameters to physical controllers like your Mod Wheel, Foot Pedals, Assignable Knobs or Assignable Function buttons, etc. The INSERTION Effect often gives the Voice its personality. The Rotary Speaker for a B3 sound, the soundboard Damper Resonance for the piano, and the Overdrive Distortion for the electric guitar are all examples of effects that give a sound its identity/personality.

The SYSTEM EFFECTS (Chorus and Reverb) are shared effects: they are shared by all the Elements together. They provide the outer environment for the sound, that is, the room acoustics. Reverb is the size and shape of the room in which the instrument is played. The Chorus processor can be thought of as a “time delay” effect. Its functions range from extremely short time delays (Flanging and Chorusing) to long, multiple-repeat delays (like Echoes).

When the VOICE is placed in an ensemble (either a PERFORMANCE or a SONG/PATTERN MIXING program) it does not take along its SYSTEM EFFECTS. Therefore the Voice will not always sound the same as it does alone in VOICE mode, because the SYSTEM EFFECTS may, in fact, be very different. The SYSTEM Effects are shared by all of the PARTS. This makes sense because, remember, System Effects are the OUTER environment: it is analogous to all the musicians playing in the same room. You have an individual Send amount for each Part, allowing you to place each instrument near to or far from the listener.

We have mentioned this before, and it’s worth repeating in this article: the VOICE is the basic, fundamental playable entity in the MOXF. The other modes we will discuss (Performance, Song and Pattern) use VOICES but place them in an entity called a PART. Understanding what a PART is, is very important, because it allows us to address the same VOICE differently when we combine it with other Voices. Say you want to combine the FULL CONCERT GRAND with a Bass sound to create a split at middle “C”, for example. You would do this by placing the Full Concert Grand in PART 1 of a Performance and your favorite Bass in PART 2. Part parameters would allow you to play the piano above a particular note and the Bass below a particular note. Those would be PART parameters. You do not have to destructively edit the original VOICES to use them in combination. PART parameters allow the same Voice to be treated differently in each ensemble. It is not necessary to create a separate version of the FULL CONCERT GRAND that only plays above the “C3” split point.

Later you decide to use the Full Concert Grand piano in combination with a String sound to create a layered sound. Again, you would place the Full Concert Grand piano in PART 1 of a Performance, and your favorite String sound in PART 2 to create a layered sound without destructively editing the original Voice data.
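Split and layer are both just a matter of which Parts respond to which notes. This Python sketch assumes each Part has a lowest and highest note it responds to (the class and field names are hypothetical) and uses Yamaha's naming, where MIDI note 60 (middle C) is called C3. Putting C3 itself on the piano side of the split is an arbitrary choice.

```python
from dataclasses import dataclass

C3 = 60  # Yamaha calls MIDI note 60 (middle C) "C3"


@dataclass
class Part:
    voice: str
    note_low: int   # lowest MIDI note this Part plays (hypothetical field name)
    note_high: int  # highest MIDI note this Part plays (hypothetical field name)


def voices_for_note(parts: list[Part], note: int) -> list[str]:
    """Every Voice that sounds when this note is played."""
    return [p.voice for p in parts if p.note_low <= note <= p.note_high]


# Split: piano from C3 up, bass below C3. Neither Voice itself is edited.
split = [Part("Full Concert Grand", C3, 127), Part("Bass", 0, C3 - 1)]
# Layer: both Parts cover the whole keyboard.
layer = [Part("Full Concert Grand", 0, 127), Part("Strings", 0, 127)]

print(voices_for_note(split, 64), voices_for_note(split, 48))
print(voices_for_note(layer, 64))
```

The Voices are referenced by name only; all the split or layer information lives in the Part settings, which is why the original Voice data stays untouched.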

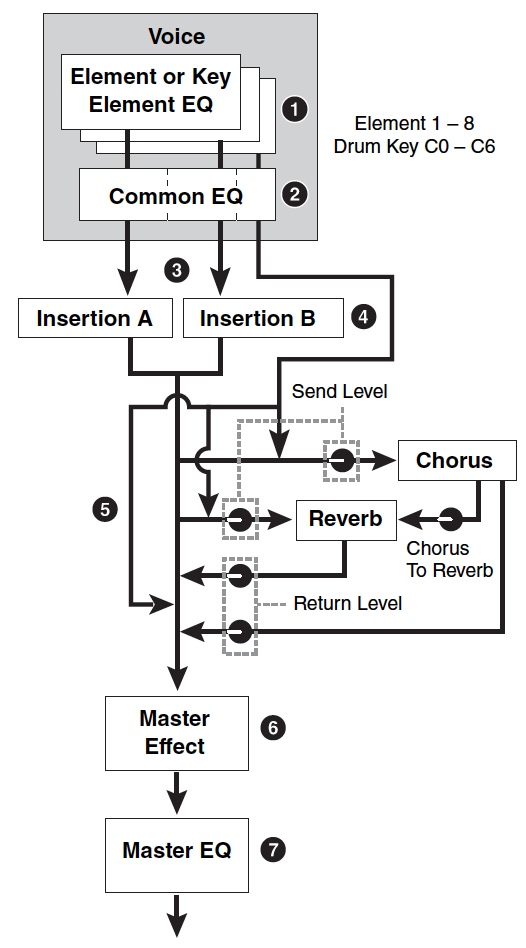

In PERFORMANCE mode:

There can be up to 4 Voices plus an A/D INPUT in a Performance. The DUAL INSERTION EFFECTS are available for all Parts of the Performance. That is, Voices in a Performance can recall their original Dual Insertion Effect routing and control while in a Performance. What actually happens is that you are activating the Dual Insertion Effects that were programmed at the Voice level. Insertion Effects are applied at the VOICE Edit level.

What this means: an organ sound that has a Rotary Speaker and Amp Simulator effect back in Voice mode will automatically recall these (personality) effects when you place it in a PART of a PERFORMANCE and activate the INSERT FX SWITCH. The guitar sound that has an Overdrive Distortion and Wah-Wah effect back in Voice mode will automatically recall these effects when you place it in a PART of a PERFORMANCE and activate the INSERT FX SWITCH. The Full Concert Grand piano will automatically bring along its Damper Resonance, because INSERTION EFFECTS can be considered a part of the VOICE. Of course, any assigned controllers are automatically recalled as well.

Each Voice in a Performance is called a ‘PART’. Each Part has an individual send level to the System Effects so that you can control how much is applied individually. There is a return level from each System Effect. The total signal is delivered to the Master EFFECT, then to the Master EQ and then on to the stereo outputs.

1) Part EQ applied to each Part – each Part features a 3-Band EQ

2) Selection of the Parts to which the Insertion Effect is applied

3) Reverb and Chorus related parameters – these are referred to as the “System Effects”

4) Master Effect related parameters

5) Master EQ related parameters

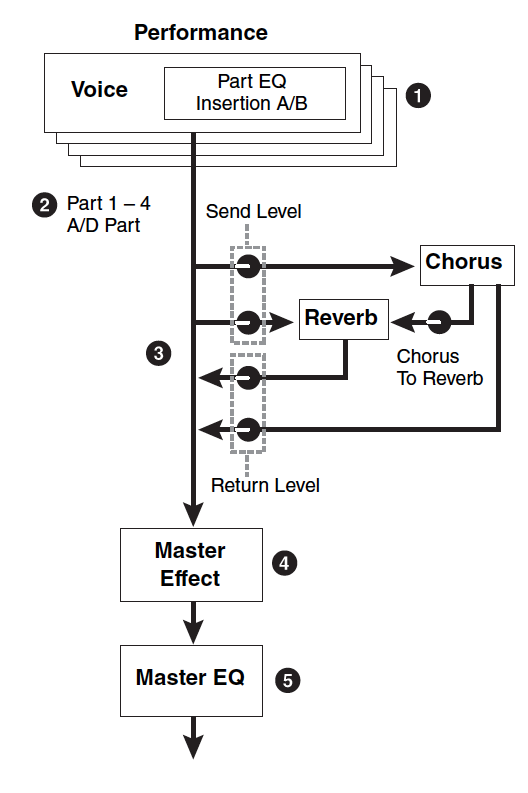

In SONG/PATTERN MIXING mode:

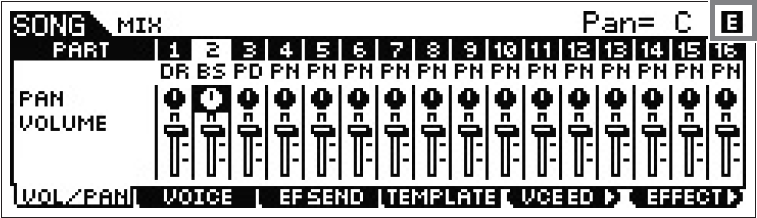

The Tone Generator block can have up to 16 Parts total. The DUAL INSERTION EFFECT can be activated on any 8 of the 16 internal MOXF Parts. Each Part has an individual Send to the System Effects (Reverb and Chorus). Finally, the entire signal goes through the Master Effect and the Master EQ, then on to the main stereo outputs.

1) Part EQ applied to each Part

2) Selection of the Parts to which the Insertion Effect is applied

3) Reverb and Chorus related parameters

4) Master Effect related parameters

5) Master EQ related parameters

Again, you do not see here which Voice Insertion Effects or A/D Insertion Effects are active; you would have to view the Voice parameters to know. Insertion Effects are a part of the individual Voice programming.

Background

The algorithms (a fancy word for ‘recipe’ or specific arrangement) in the MOXF Effects are deep. Please refer to the DATA LIST booklet to see the individual parameters and effect types. On pages 98-105 of the DATA LIST you will see a list of the different Effect Categories and Effect Names, along with their parameters and ranges of control, all in a convenient form. This is worth a look. The TABLE Number heading is for those who need to know the exact value of each setting; refer to the charts on pages 108-115 for the exact values of each parameter setting. Basically, settings are made to taste (by ear). However, knowing what is subjective and what is objective is what separates a bogus mix from a brilliant mix.

So much of working with sound is subjective (meaning it is up to you), but some of it is very objective (meaning there actually is a right and a wrong). It’s true. Knowing the difference between these two concepts is the key to greatness in the audio business. For example, when routing signal to an effect, do you return more than you send, or send more than you return? Gain staging is the objective part of audio: it means making sure you stay on the side of SIGNAL when dealing with the SIGNAL-to-NOISE ratio. The rule of thumb: send up to the limit of clean audio and return just enough to taste.
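The arithmetic behind that rule of thumb can be sketched in a few lines of Python. The model is a simplification and an assumption: the effect processor adds a fixed amount of noise regardless of how hard it is driven, and clipping is ignored. The function names are hypothetical.

```python
import math


def snr_db(signal_rms: float, noise_rms: float) -> float:
    """Signal-to-noise ratio in decibels."""
    return 20.0 * math.log10(signal_rms / noise_rms)


def return_snr_db(source: float, send_gain: float, return_gain: float,
                  fx_noise: float) -> float:
    """SNR at an effect return, assuming the processor adds a fixed noise
    floor (fx_noise) regardless of the level it receives."""
    signal = source * send_gain * return_gain
    noise = fx_noise * return_gain  # the return control scales noise too
    return snr_db(signal, noise)


# Same net effect level (send x return = 0.5), two gain structures:
hot_send = return_snr_db(1.0, send_gain=1.0, return_gain=0.5, fx_noise=0.001)
weak_send = return_snr_db(1.0, send_gain=0.1, return_gain=5.0, fx_noise=0.001)
print(round(hot_send), round(weak_send))  # 60 40
```

Because the return control multiplies signal and noise alike, only the send level changes the ratio. That is why you send up to the limit of clean audio (sending beyond it would clip, which this sketch does not model) and return to taste.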

If you are sending signal to an effect processor that you have configured as an EQ, how much signal do you send? Again, this is not subjective; there is a right and a wrong. Send all the signal through the EQ. If you were to return dry signal in certain routing scenarios, you could cause phase cancellation, a situation where you adversely affect the signal’s integrity. Knowing what you are doing with effects can mean confident use with stunning results; just experimenting willy-nilly can lead to bogus results. Of course, you could eventually wind up with something usable, but the old saying “Knowledge is power” does apply here. In most instances the MOXF will not let you get into too much trouble: sometimes you are prevented from controlling certain things because it would be illogical or lead to bogus results …those decisions are made by the designers. For example, where a subjective return makes sense you will see a DRY/WET balance parameter, so that you can mix your amount of effect return, but on a device like an EQ there is no balance control, because the design will not let you make that “mistake”. This is a good thing.
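Why does mixing dry signal back in matter? Most EQ filters shift the phase of some frequencies as well as changing their level. The Python sketch below is a simplification that assumes the processed copy is at equal level and differs from the dry signal only by a fixed phase shift; it shows what happens when the two are summed. In phase they reinforce, and 180 degrees apart they cancel completely.

```python
import cmath
import math


def summed_gain(phase_shift_deg: float) -> float:
    """Gain of dry + an equal-level copy shifted by phase_shift_deg.

    Computes |1 + e^(j*phi)|, which equals 2*|cos(phi/2)|.
    """
    phi = math.radians(phase_shift_deg)
    return abs(1 + cmath.exp(1j * phi))


for deg in (0, 90, 180):
    print(deg, round(summed_gain(deg), 3))
```

A real EQ's phase shift varies with frequency, so the result is not silence but a frequency response with dips you never asked for, which is why a device like an EQ should process all of the signal.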

As you will learn, not all parameters are available for real time control – again, a design decision is made to prevent unfortunate illogical assignments that would cause sonic problems.

The Processors: System – Insertion – Master

The Effect processors are divided into SYSTEM Effects (Reverb and Chorus processors); INSERTION EFFECTS (applied within the Voice architecture); MASTER Effects (applied overall just before the output).

The REVERB processor has 9 main algorithms available. When working with a reverb algorithm you can select by type and size of environment: REV-X HALL, R3 HALL, SPX HALL, REV-X ROOM, R3 ROOM, SPX ROOM, R3 PLATE, SPX STAGE, and SPACE SIMULATOR. From there you can tweak it to match your specific needs.

Yamaha was the first company to introduce DSP effects that were based on the actual dimensions of the great concert halls of the world. A “HALL” is typically a large concert environment. The REV-X is the most recent development in a long line of Yamaha reverberation chamber algorithms and is the same effect found in the SPX2000 processor and in the high-end digital touring consoles from Yamaha. The Pro R3 was one of the first high-resolution digital studio reverbs and enjoys a stellar reputation in the field. The Yamaha SPX introduced the project studio digital reverb back in the 1980s.

“ROOMS” have a definite size factor to the space. A “STAGE” is usually a loud, reverberant environment. A “PLATE” is a brilliant emulation of the old 10-foot boxes that housed plate reverbs: a transducer (driver) at one end and a second transducer (a pickup, acting as the microphone) at the other…in between was a large, thin metal plate. You sent signal from the mixing board’s aux sends and returned up to a maximum of 5 seconds of cool reverb. This was the standard for drums and percussion “back in the day”. The SPACE SIMULATOR will help you design your own environment and can teach you about how the other presets were made. It allows you to set the width, height and depth of the walls, and ‘wall vary’ lets you set the reflective texture of the surface, from rug to steel. A rug absorbs sound, while steel is highly reflective. Under the SPACE SIMULATOR you will find several presets that give you an idea of just what types of spaces you can simulate: Tunnel, Basement, Canyon, White Room, Live Room, and 3 Walls…

When you are thinking about these, you must imagine how each will sound and why. A tunnel, for example, is long and narrow with reverberant walls, while a basement has a low ceiling and probably not much reflection of sound. A canyon, as you can picture, has no ceiling, so it is a wide-open space with long reflections and bounce-back. The “white room” is a starting point where you configure the space; this preset is simply a neutral start…

Also important in working with reverb is an understanding of how it works in the real world. In most listening situations you hear a certain amount of signal directly from the sound source, while the rest of the signal bounces off the environment you are standing in. If, for example, you are 30 feet from the stage, you will hear a portion of the sound direct from the stage, but most of it will bounce off the walls, floor and ceiling to arrive at your position. Because we often record and/or amplify musical signal with a technique called “close-miking”, reverb became a necessary evil (if you will). Close-miking allows us to isolate a particular sound from others in the environment, but there is a trade-off…we lose that sense of distance and environment. To regain some of that distance we use artificial reverb. Recognize that putting a different amount of reverb on the snare than on the flute is something that does not occur in nature. All the musicians in the same room would naturally have the same reverberant environment, with very subtle differences due to their positions in the room. This gets back to the subjective part of the audio business. SO WHAT? You can use effects to taste. There is no rule that says everyone has to have good taste, nor that you have to exercise it.

An important parameter in all the reverbs is the INITIAL DELAY: the time before the reverb receives the signal. It can help position the listener near to or far from the instrument source. The initial delay in any acoustic environment is the time it takes before the signal reaches a significant boundary. In a large hall it could be several hundred milliseconds before signal bounces off the back wall.
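To relate INITIAL DELAY to physical space, the arithmetic is just extra distance divided by the speed of sound. This is a minimal Python sketch assuming sound travels at about 343 m/s (dry air near 20 °C); the function names are hypothetical, and the MOXF simply asks you for a time value, so this only helps you estimate one.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 °C


def reflection_delay_ms(direct_path_m: float, reflected_path_m: float) -> float:
    """How much later a reflection arrives than the direct sound, in ms."""
    extra_path = reflected_path_m - direct_path_m
    return 1000.0 * extra_path / SPEED_OF_SOUND_M_S


def back_wall_round_trip_ms(distance_to_wall_m: float) -> float:
    """Time for sound to travel to a wall and back to its starting point."""
    return 1000.0 * 2.0 * distance_to_wall_m / SPEED_OF_SOUND_M_S


print(round(back_wall_round_trip_ms(50.0), 1))   # about 291.5 ms
print(round(reflection_delay_ms(10.0, 13.43), 1))  # about 10 ms
```

A back wall 50 m away gives roughly 290 ms, in line with the hundreds of milliseconds mentioned above for a large hall; small rooms land in the single or low double digits.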

The HPF and LPF are there to help you shape the reverb signal itself. There is a rule of thumb here: low frequencies reverberate less than high frequencies. Low frequencies tend to hit a surface like a wall and spread out, while high frequencies hit a wall and bounce back into the room. This is why, when you are sitting next door to the party, you only hear the bass through the wall: all the high-frequency content ‘reverberates’ and stays in the source room. So use the HPF (high pass filter) to allow the highs to pass through to the reverb and block the lows from reverberating. Reverb on bass just adds MUD. MUD is not a subjective term, but if it is what you want, go for it (but yuck, it is mud). Low frequencies don’t bounce back; they tend to hug the walls and spread out. If you want cutting, punchy bass, leave the bass “dry” (without reverb).
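Here is what an HPF does to the signal feeding a reverb, as a minimal Python sketch. It uses the simplest possible design, a first-order (6 dB per octave) RC-style high-pass filter, purely because it is short; the MOXF's own HPF/LPF design is not described here, and the 400 Hz cutoff is an arbitrary example value.

```python
import math


def one_pole_highpass(x: list[float], cutoff_hz: float,
                      sample_rate: float) -> list[float]:
    """First-order high-pass filter: y[n] = a * (y[n-1] + x[n] - x[n-1])."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    a = rc / (rc + dt)
    y: list[float] = []
    prev_x = prev_y = 0.0
    for sample in x:
        out = a * (prev_y + sample - prev_x)
        y.append(out)
        prev_x, prev_y = sample, out
    return y


def rms(x: list[float]) -> float:
    return math.sqrt(sum(v * v for v in x) / len(x))


fs = 44100.0
n_samples = int(0.1 * fs)  # 100 ms of audio
bass = [math.sin(2 * math.pi * 40 * n / fs) for n in range(n_samples)]
cymbal = [math.sin(2 * math.pi * 4000 * n / fs) for n in range(n_samples)]

# Filter what goes to the reverb, not the dry signal (400 Hz cutoff).
for name, sig in (("40 Hz", bass), ("4 kHz", cymbal)):
    print(name, round(rms(one_pole_highpass(sig, 400.0, fs)) / rms(sig), 2))
```

The 40 Hz tone reaches the reverb at roughly a tenth of its level while the 4 kHz tone passes almost untouched, so the reverb tail stays bright without adding mud.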

The MOXF Reverb processor features a brand new effect algorithm set based on the heralded Yamaha “REV-X” technology. “REV-X” is a whole new generation of Yamaha Reverb with the richest reverberation tone and smoothest decay. There are “Hall”, “Room” and “Plate” algorithms. Newly introduced parameters like ROOM SIZE and DECAY envelope also bring much higher definition and finer nuance. Reverb quality is determined by the number of reflections the algorithm uses: the higher the number, the more definition and the finer the quality of sound. This is processor intensive; the reflections are very short, but there are lots of them to make the sound smooth.

The CHORUS processor features short time-period delays, from phasing and flanging to chorusing, and on out to multiple repeats and echoes. “Short” here is still much longer than the spacing between reverb reflections; at the long end of the range the repeats can be heard as separate events. You even get additional Reverb algorithms for maximum flexibility when mixing. There are also tempo-controlled delays that can be synchronized to the BPM of the music.
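The tempo-controlled delays rest on simple arithmetic: at a given BPM, one beat lasts 60000 / BPM milliseconds. This Python sketch assumes the beat is a quarter note (as in 4/4); the function name is hypothetical, and since the MOXF lets you choose note values directly, this only shows what those choices correspond to in time.

```python
from fractions import Fraction


def note_length_ms(bpm: float, note: Fraction) -> float:
    """Length of a note value in ms; Fraction(1, 4) is a quarter note.

    Assumes the beat (the B in BPM) is a quarter note.
    """
    beat_ms = 60000.0 / bpm
    return beat_ms * float(note / Fraction(1, 4))


for label, note in (("1/4", Fraction(1, 4)), ("1/8", Fraction(1, 8)),
                    ("dotted 1/8", Fraction(3, 16)), ("1/16", Fraction(1, 16))):
    print(label, note_length_ms(120, note))
```

At 120 BPM this gives 500, 250, 375 and 125 ms. Change the tempo and every repeat time scales with it, which is exactly what keeps a synced delay locked to the groove.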

A Flanger is a time delay effect. If two identical signals arrive at your ear-brain, you will not be able to perceive them as two separate signals until one is delayed slightly. Imagine 2 turntables in perfect synchronization playing the same record at exactly the same speed. You would perceive the second one as just making the first signal louder until you delayed one of them a bit. If one slips 1ms behind the other you will perceive what we call flanging. The actual name comes from two 2-track reel-to-reel tape decks playing the same material. This was used as a real time effect, “back in the day”. You would have 2 identical 2-track decks running in sync (no, there were no protocols to sync them – you pressed the buttons at the same time!!!) The engineer would slow one down by placing his thumb momentarily on the flange (reel holder). The resulting swirling sound is called flanging. And there were no settings – it was all done by ear.

Any delay between exact sync and 4ms is considered flanging. Delays of 4ms-20ms are considered chorusing and somewhere beyond 30ms the ear-brain starts to perceive two separate events, called doubling or echo. Among the ‘time-delay’ algorithms in the Chorus processor you will find: Cross Delay, Tempo Cross Delay, Cross Delay Mono, Tempo Delay Stereo, Delay L/R, Delay L/C/R, Delay L/R Stereo, G Chorus, 2 Modulator, SPX Chorus, Ensemble Detune, Symphonic, VCM Flanger, Classic Flanger, Tempo Flanger, VCM Phaser Mono, VCM Phaser Stereo, Tempo Phaser, Early Reflection; additionally you will find three SPX Reverbs available in the Chorus processor (very useful when you want to set a lead or section of instruments apart from the rest of your mix), a Hall, Room and Stage reverb.
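The ranges above can be written down directly. The sketch also shows where the swirl of flanging comes from: mixing a signal with an equal-level copy delayed by τ cancels every frequency where the delay equals an odd number of half-cycles, that is f = (2k + 1) / (2τ), and sweeping the delay moves those notches up and down. The category boundaries are this article's rules of thumb; labeling the 20-30 ms range the text leaves open as "transition" is an assumption, and the function names are hypothetical.

```python
def classify_delay(delay_ms: float) -> str:
    """Label a delay time using this article's rule-of-thumb ranges."""
    if delay_ms < 0:
        raise ValueError("delay must be non-negative")
    if delay_ms <= 4:
        return "flanging"
    if delay_ms <= 20:
        return "chorusing"
    if delay_ms < 30:
        return "transition"  # the text leaves 20-30 ms open
    return "doubling/echo"


def comb_notches_hz(delay_ms: float, max_hz: float = 20000.0) -> list[float]:
    """Frequencies cancelled when a signal is mixed with an equal-level copy
    delayed by delay_ms: odd multiples of 1 / (2 * delay)."""
    if delay_ms <= 0:
        raise ValueError("delay must be positive")
    delay_s = delay_ms / 1000.0
    notches: list[float] = []
    k = 0
    while (f := (2 * k + 1) / (2 * delay_s)) <= max_hz:
        notches.append(f)
        k += 1
    return notches


print(classify_delay(1.0), comb_notches_hz(1.0, max_hz=3000.0))
print(classify_delay(10.0), classify_delay(50.0))
```

The 1 ms slip from the turntable example notches out 500 Hz, 1.5 kHz, 2.5 kHz and so on; nudge the delay and the whole series of notches slides, which is the swirling sound you hear.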

Each of these main algorithms has its own “Presets”. A Preset is simply a starting point. Remember, only you can know what is working for your particular composition; the Presets are provided to be tweaked by you.

The INSERTION EFFECT is made up of two identical units (INSERTION A and INSERTION B). The 53 effect types and scores of presets can be the subject of intense study. We will try to introduce you to some of the more unusual and unique ones in this article. Many of the recipes (algorithms) are repeated in the Insertion Effects simply to allow you more options when processing your mixes. In addition to all the reverbs, delays, echoes, cross delays, tempo delays, etc., you get some that are available nowhere else. Insertion Effects can be considered a part of the Voice itself, and can be assigned real time controllers so that you can manipulate them while performing.

The Yamaha VCM (Virtual Circuitry Modeling) Effects are revolutionary in that they are recreations constructed by modeling the circuit components (transistors, capacitors, resistors) of the classic gear they emulate. The designers could then reconstruct the products by creating virtual circuit boards. The VCM Flanger is a simulation of the classic vintage flanger devices. The VCM Phasers faithfully reproduce the response of the old mono and stereo guitar stomp boxes of the 1970s.

Among the innovative effects from the Yamaha Samplers A4000/5000 are the Lo-Fi, Noisy, Digital Turntable, Auto Synth, Tech Modulation, Isolator, Slice, Talking Modulator, Ring Modulator, Dynamic Ring Modulator and Dynamic Filter.

There is a Multi-band Compressor algorithm that is great for fixing and punching up specific frequency ranges. Multi-band compressors are used to finalize mixes and to bring out (punch up) specific frequency bands without raising overall gain. These are ideal when importing a stereo audio clip or when you are resampling within the MOXF.
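The core of any compressor is its gain curve: above the threshold, each increase of "ratio" dB at the input yields only 1 dB more at the output. A multi-band compressor applies a separate curve to each frequency band. This Python sketch shows only that static, hard-knee curve per band; the band-splitting filters, attack/release and makeup gain are omitted, and the band settings are hypothetical rather than the MOXF Multi-band Comp parameters.

```python
def gain_reduction_db(level_db: float, threshold_db: float, ratio: float) -> float:
    """Static hard-knee compressor curve: dB of gain reduction for a given level."""
    if ratio < 1.0:
        raise ValueError("ratio must be at least 1:1")
    over = level_db - threshold_db
    if over <= 0.0:
        return 0.0  # below threshold: untouched
    return over * (1.0 - 1.0 / ratio)


# Hypothetical band settings: (threshold dB, ratio)
band_settings = {"low": (-20.0, 4.0), "mid": (-15.0, 2.0), "high": (-10.0, 1.0)}
band_levels_db = {"low": -8.0, "mid": -12.0, "high": -6.0}

for band, (threshold, ratio) in band_settings.items():
    print(band, gain_reduction_db(band_levels_db[band], threshold, ratio))
```

Here only the low band is pulled down hard (9 dB), the mids slightly and the highs not at all. Adding makeup gain to one band afterwards is what lets you punch that band up without touching the others.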

The Digital Turntable algorithm adds “vinyl record surface noise” to your mix. You can program the tone of the noise, the frequency and randomness of the clicks and pops, and you can even program how much dust is on the stylus!!!

Slice is also the name of one of the effect algorithms, in addition to being a sample edit process. This Slice effect can divide the audio into musically timed packets that it can pan left and right in tempo. You can select a quarter note, eighth note or sixteenth note slice, and there are 5 different pan envelopes and some 10 different pan types.
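The timing side of Slice is the same tempo arithmetic as the tempo delays; the panning side gives each slice a position in the stereo field. This Python sketch rests on stated assumptions: pan positions run from 0.0 (left) to 1.0 (right), a constant-power (cos/sin) pan law keeps loudness even, and the function names are hypothetical. The actual Slice effect offers its own pan envelopes and pan types, which this does not reproduce.

```python
import math


def slice_length_ms(bpm: float, slice_note: int) -> float:
    """Length of one slice; slice_note is 4 (quarter), 8 (eighth) or 16 (sixteenth)."""
    if slice_note not in (4, 8, 16):
        raise ValueError("slice_note must be 4, 8 or 16")
    return (60000.0 / bpm) * 4 / slice_note


def pan_gains(position: float) -> tuple[float, float]:
    """Constant-power pan: position 0.0 = left, 0.5 = center, 1.0 = right."""
    theta = position * math.pi / 2
    return math.cos(theta), math.sin(theta)


def slice_pan_pattern(num_slices: int,
                      positions: tuple[float, ...] = (0.0, 1.0)) -> list[tuple[float, float]]:
    """(left, right) gains for each slice, cycling through the given positions."""
    return [pan_gains(positions[i % len(positions)]) for i in range(num_slices)]


print(slice_length_ms(120, 16))  # 125.0
print(slice_pan_pattern(4, positions=(0.0, 0.5, 1.0, 0.5)))
```

Cycling through left, center, right and back at sixteenth-note slices gives a pan sweep that moves in time with the groove, with each slice at the same perceived loudness.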

The innovative CONTROL DELAY effect is a digital version of the old style tape delay (Echoplex) where you can create wild repeating effects. When using the Control Type = Scratch you can assign a controller to create insane echo effects.

Why is it called “Insertion Effect” and what is the difference between it and a “System Effect”?

On an audio console you have a series of channels. Channels carry inputs or returns from a multi-track (we refer to them as Input Channels or Track Channels depending on their role). Each channel has an on/off button, EQ, a fader, and a set of auxiliary sends. These ‘aux’ sends allow each channel to send a portion of its signal on what is called a bus (a group of wires carrying like signals). That bus can then be connected to an outboard effect processor in a rack. The return comes back to the board and is mixed into the stereo signal. That scenario is an example of what happens in the MOXF with the SYSTEM EFFECTS. That is, when you are in a Song or Pattern and on the MIXING screens, the REVERB and CHORUS Effects are arranged so that access is just like the auxiliary sends of a console: each channel (Part) has an individual send amount to the System Effects. There is a composite return signal that is mixed to the stereo output.
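The aux-send arrangement boils down to this: every channel contributes its own send amount to one shared bus, one processor handles that bus, and a single return is added to the dry mix. This is a mono, single-sample Python sketch with hypothetical names and a placeholder effect; real processing works on continuous streams of stereo samples.

```python
from typing import Callable


def mix_with_system_effect(parts: dict[str, float], sends: dict[str, float],
                           effect: Callable[[float], float],
                           return_level: float) -> float:
    """One sample of a console-style aux-send mix.

    parts:  dry sample value of each channel (Part)
    sends:  per-Part send amount (0.0-1.0); missing Parts send nothing
    effect: the single shared processor fed by the send bus
    """
    dry = sum(parts.values())
    bus = sum(level * sends.get(name, 0.0) for name, level in parts.items())
    return dry + return_level * effect(bus)  # composite return added to the mix


parts = {"piano": 1.0, "bass": 1.0, "strings": 1.0}
sends = {"piano": 0.3, "strings": 0.6}  # the bass stays dry
print(mix_with_system_effect(parts, sends, lambda x: x, return_level=0.5))
```

However many Parts feed it, the shared processor runs once on the summed bus, which is why every Part ends up in the same “room” and only the send amounts differ.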

An Insertion Effect on an audio console is usually accessed via ‘patch points’ (interruption points in the channel’s signal flow) that allow you to reroute all of the channel’s signal via a patch bay through the desired effect or device. You are, literally, inserting a processor on that specific channel alone. This is how the INSERTION EFFECT block works on the MOXF.

Examples: Typically, when a reverb effect is set up, just a portion of each sound is sent to it. This is the perfect example of what a System effect is about. However, things like rotary speaker (organ) or amp simulator (guitar) are effects that you might want to isolate on a specific channel. Therefore these types of effects are usually accessed as an Insertion Effect. One key advantage of the Insertion Effect is that it can be controlled in real time, during the performance. Since the Insertion Effects are programmed at the VOICE level, you can use the Control Sets (there are 6) to route your physical controllers to manipulate the parameters of the Insertion Effect in real time. You can change the speed of the rotary speaker, or you can manipulate the Guitar Amp simulation setting while performing the guitar sound. This type of control goes beyond the send level (for the System Effects, the Voice mode Controller assignment gives you access to the send level only). In the real world, the size of the room does not change (hopefully), so System effects like reverb are pretty much “set it/forget it”. However, changing the speed of the rotary speaker effect is something that you may want to perform during the song.
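Each Control Set entry links a physical controller to an effect parameter. MIDI controllers such as the Mod Wheel send values from 0 to 127; the Python sketch below maps that range linearly onto a destination range. The class and field names and the linear mapping are assumptions for illustration; the MOXF's own Control Set parameters (such as depth) scale things in their own way.

```python
from dataclasses import dataclass


@dataclass
class ControlAssignment:
    """Hypothetical model of one Control Set entry (not MOXF parameter names)."""
    source: str        # physical controller, e.g. "Mod Wheel"
    destination: str   # Insertion Effect parameter, e.g. "Rotary Speed"
    min_value: float   # destination value at controller minimum
    max_value: float   # destination value at controller maximum

    def apply(self, cc_value: int) -> float:
        """Map a 7-bit MIDI controller value (0-127) linearly onto the range."""
        if not 0 <= cc_value <= 127:
            raise ValueError("MIDI controller values run from 0 to 127")
        return self.min_value + (self.max_value - self.min_value) * cc_value / 127


rotary = ControlAssignment("Mod Wheel", "Rotary Speed", 0.0, 1.0)
print(rotary.apply(0), rotary.apply(64), rotary.apply(127))
```

Because the assignment is stored with the Voice, the same wheel move produces the same effect change wherever that Voice is used.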

Just how are you able to control certain parameters in an Insertion Effect? …via MIDI commands, of course. In the hierarchy of modes in the MOXF, VOICE mode is the most important when it comes to programming. This is where Yamaha spent hours and hours developing the sounds you play. The programmers assembled the multi-samples into waveforms, combined the waveforms into the Voice, and worked with the envelopes, the response to velocity, the pitch, the tuning, the filters and so on. Each Element in the MOXF has its own EQ, and the meticulous programming goes on for months at a time. Of course, part of the arsenal available to the programmers was the Effects.

How are the EFFECTS routed?

In VOICE mode, navigate to the MOXF Effect connection screen:

• Press [EDIT]

• Press [COMMON]

• Press [F6] EFFECT

• Press [SF1] CONNECT

For PERFORMANCE and/or SONG/PATTERN mode

• Press [MIXING]

• Press [EDIT]

• Press [COMMON]

• Press [F6] EFFECT

• Press [SF1] CONNECT

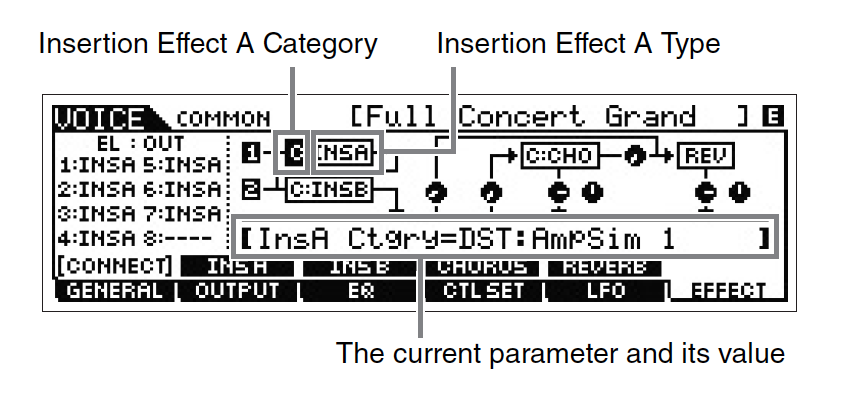

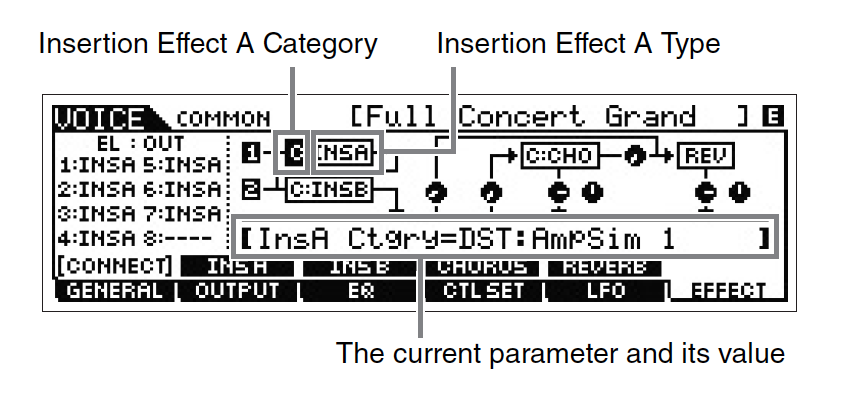

This screen shows you an overview of the connections and the signal flow (follow the routing left to right). It pays to study the diagram to get a clear understanding of how signal travels. Each Element is routed to “INS A”, “INS B” or “thru”. The INSERTS are routed in parallel, A>B, or B>A. You have sends to the Reverb and to the Chorus, plus a send from the Chorus to the Reverb. There are Return Levels and Pan positions.

VOICE Mode:

Each normal Voice can have as many as eight Elements, each with its own Element EQ. A drum Voice has a different Element on each of its 73 keys, and each has its own EQ. The entire Voice (all Elements together) then goes through the Voice’s COMMON EQ.

While in VOICE mode each Voice can use a pair of Insertion Effects processors [A] and [B]. Each of the eight Elements of a normal Voice can be routed to one, the other, both or neither of the Insertion processors. Real time control over selected parameters is possible in the Voice’s CONTROL SET. Each drum Key can be routed to one or the other or neither of the Insertion processors.

Next is the SEND/RETURN setup where a portion of the total Voice signal can be routed to the SYSTEM EFFECT processors (Reverb and Chorus). There is a SEND amount routing the output of the Chorus processor to the input of the Reverb, if desired.

PERFORMANCE Mode:

A PERFORMANCE is a combination of as many as four internal synth PARTS plus an A/D INPUT PART. In other words, there are storable parameters for the A/D INPUT for each individual PERFORMANCE. The important thing to understand here is how the Effect processors are allocated in this mode. All of the PARTS can use their own dual Insertion Effect routing. All five of the PARTS have a separate SEND amount control to the Chorus processor and to the Reverb processor. Then the entire signal goes through the MASTER EFFECT and the MASTER EQ before going to the output.

Each Part has its own control, at the Part Edit level, for the amount of signal sent to the System Effects. While in Edit, you can select the PART to edit by pressing the numbered button corresponding to that Part.

Notice that between the Chorus processor and the Reverb processor you have a level Send control knob: Chorus-to-Reverb Send. This can be used to create a situation where the System effects are used in series (one after the other) rather than in parallel (side by side). An example of how this can make a difference is when you select a DELAY as the effect for the Chorus and a HALL for the Reverb. With parallel routing, you send signal independently to the delay and to the reverb: only the initial note will have reverb, and each repeat will be dry. If instead you set the Reverb send to 0, send the signal through the Chorus first and then on to the Reverb via the Chorus-to-Reverb send, each repeat of the Delay will have reverb.
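The difference between the two routings can be checked with a tiny Python simulation. This sketch (function names hypothetical) models the Chorus as a feedback delay and records only what reaches the Reverb input for a single note; time is counted in arbitrary steps and the reverb itself is left out.

```python
def feedback_delay(x: list[float], delay: int, feedback: float) -> list[float]:
    """100%-wet feedback delay: returns only the delayed repeats."""
    y = [0.0] * len(x)
    for n in range(delay, len(x)):
        y[n] = x[n - delay] + feedback * y[n - delay]
    return y


def reverb_input(x: list[float], rev_send: float, cho_send: float,
                 cho_to_rev: float, delay: int = 10,
                 feedback: float = 0.5) -> list[float]:
    """What arrives at the Reverb input when the Chorus is set to a delay."""
    repeats = feedback_delay([s * cho_send for s in x], delay, feedback)
    return [rev_send * dry + cho_to_rev * wet for dry, wet in zip(x, repeats)]


impulse = [1.0] + [0.0] * 39  # a single note, 40 time steps
parallel = reverb_input(impulse, rev_send=1.0, cho_send=1.0, cho_to_rev=0.0)
series = reverb_input(impulse, rev_send=0.0, cho_send=1.0, cho_to_rev=1.0)
print([n for n, v in enumerate(parallel) if v])  # [0]
print([n for n, v in enumerate(series) if v])    # [10, 20, 30]
```

In the parallel case only the original note reaches the reverb; in the series case every delay repeat does, each a little quieter than the last because of the feedback setting.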

• Press [SF2] INS SWITCH

You can select any PART.

As you move your cursor to the right in the [SF1] CONNECT screen you can move the cursor highlight into the CHORUS or REVERB effect and select from among the different algorithms. A convenient Category function (“c”) lets you quickly sort through the different effect types (“DST”, for example, is the Distortion category, and “AmpSim 1” Amplifier Simulator 1 is the program type).

You can drop into edit of any of these four processors via the associated [SF] (Sub Function) button. For example, in the screen above [SF2] is INS A, [SF3] is INS B, [SF4] is Chorus and [SF5] is Reverb.

Once you enter EDIT for one of the processors, you may need to use the PAGE [<]/[>] buttons to view multiple screens. This varies according to the particular effect type in question.

You do not access the INSERTION EFFECTS via PART parameters so where do you edit the Insertion Effects?

The Insertion Effects are simply accessed from Voice mode. When you go to this same [CONNECT] screen in VOICE mode you will see [SF] buttons available to access the INSERTION EFFECT parameters. The Insertion Effects do not appear in the MIXING CONNECT screen because the Insertion Effects are part of the VOICE mode edit parameters. If you need to radically change an Insertion Effect from the original programming then you will need to create a USER Voice with your new Insertion Effect edits and STORE it as a USER VOICE. That USER VOICE can then be used in your PERFORMANCE.

What if I want to edit a Voice’s Insertion Effects while I’m working on a SONG or a PATTERN?

You have the ability to edit a Voice directly while still in a Song Mixing or Pattern Mixing program. Because it is so often required to make changes to a VOICE when used in a sequence, the MOXF provides a shortcut method to access full edit functions for a VOICE while you remain in the SONG MIXING or PATTERN MIXING mode. Here’s how this works:

Press [EXIT] to leave EDIT mode, then press [MIXING] to view the MIX screen. The MOXF allows you to drop into full Voice Edit for any Voice while still in MIXING mode. Press the [F5] VCE ED (Voice Edit) button to drop into edit.

This allows you to edit a Voice and its two Insertion Effects (provided the INS SWITCH is active for the PART) while you are using the sequencer so that edits can be done in the context of the music sequence. When you STORE this edited Voice it will automatically replace the Voice in your MIX in a special “MIX VOICE” bank, which is “local” to the current Pattern or Song. (If you are editing a DRUM KIT you will need to store this KIT to one of the 32 provided USER DRUM Bank locations).

What this means is that the Mix Voice will automatically load when you load the Pattern or Song, even if you load just the individual Pattern or Song. Each Pattern Mix and Song Mix has 16 Mix Voice locations total. Due to their complexity, Drum Voices cannot be stored in a Mix Voice location.

In Voice Edit you have 6 Control Sets that allow you to customize how the available effect parameters are controlled. Choose your assigned MIDI controls wisely; they will be available when you go to Song or Pattern Mixing.

Master Effects

The Master Effects are “post” everything but the Master EQ, so they are applied to the overall System signal (stereo). These are 9 effect algorithms that you will also find in the Dual Insertion Effects. If you want to apply one of them to a single sound, you can create a Voice and find the algorithm within the 116 Dual Insertion Effects.

These selected types are:

• DELAY L,R STEREO

• COMP DISTORTION DELAY

• VCM COMPRESSOR 376

• MULTI BAND COMP

• LO-FI

• RING MODULATOR

• DYNAMIC FILTER

• ISOLATOR

• SLICE

These are typically “DJ”-type effects, for lack of a better term, because, as with a DJ, they are applied to the entire recording. A DJ plays back a record or CD that is already a finished mix, so any effects the DJ adds are always “post”: a DJ cannot put a Dynamic Filter on just the snare drum, if you get my meaning. Hence “DJ-style effects”. These Effects are applied to the entire SYSTEM signal. Don’t be afraid to use your imagination with the Master Effects; some of them are quite radical. Try putting a Delay on the final hit of the song so that it repeats and fades, or using the frequency Isolator to roll out all the bass for a section of a song and then bring it back in for dynamic impact, or creating wacky panning effects with the Slice algorithm, which pans the signal left and right in tempo with the groove. On the more conventional side, you also get a powerful Multi-band Compressor for pumping up the frequency bands of the final mix. Awesome tools… experiment!!!
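For the “Delay on the final hit” trick, two bits of arithmetic help: matching the delay time to the song tempo, and estimating how many echoes you hear before the tail fades out. The sketch below assumes the delay’s feedback behaves as a simple linear gain per repeat and treats a 60 dB drop as “faded out”; both are illustrative assumptions rather than documented MOXF parameter behavior, and the function names are hypothetical.

```python
import math


def delay_ms(bpm: float, beats: float = 1.0) -> float:
    """Delay time in milliseconds for a note lasting `beats` quarter notes.

    One quarter note lasts 60,000 / bpm milliseconds.
    """
    return 60_000.0 / bpm * beats


def echoes_to_fade(feedback: float, drop_db: float = 60.0) -> int:
    """Passes through the feedback loop before the echo has dropped by drop_db.

    Assumes each echo is `feedback` times the one before (0 < feedback < 1),
    so after n passes the level has changed by 20 * log10(feedback ** n) dB.
    """
    if not 0.0 < feedback < 1.0:
        raise ValueError("feedback must be strictly between 0 and 1")
    return math.ceil(-drop_db / (20.0 * math.log10(feedback)))


# Usage: quarter-note and dotted-eighth echoes at 120 BPM, 50% feedback.
print(delay_ms(120))        # 500.0
print(delay_ms(120, 0.75))  # 375.0
print(echoes_to_fade(0.5))  # 10
```

Each halving of the level costs about 6 dB, which is why 50% feedback needs roughly ten repeats to fall 60 dB.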

Master EQ

Although not technically an effect (EQ is an essential utility for any mixer), the Master EQ is the last process the signal goes through prior to the main outputs.

In Voice mode, the Master EQ is set up globally for the mode (it applies to all Voices). While in Voice mode:

• Press [UTILITY]

• Press [F3] VOICE

• Press [SF2] MASTER EQ

Here you will find the full 5-band parametric EQ. Parametric means you can set the Frequency, the Gain (boost/cut) and the Q (or width) of each band. Within each VOICE you will also find a three-band EQ (with an adjustable Mid frequency) available via the KNOB CONTROL FUNCTIONS for quick tweaks.
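To see what the three parametric controls do, here is a Python sketch of a peaking EQ band built with the widely used formulas from Robert Bristow-Johnson’s “Audio EQ Cookbook”. This is a generic technique, not Yamaha’s internal implementation; the 44.1 kHz sample rate, the band settings, and the function names are assumptions for illustration, and every band is treated as a peaking filter even though the outermost bands of a real 5-band EQ may offer shelving shapes.

```python
import cmath
import math

FS = 44_100.0  # assumed sample rate for this illustration


def peaking_band(f0: float, gain_db: float, q: float, fs: float = FS):
    """Biquad coefficients (b, a) for one peaking EQ band (RBJ cookbook).

    f0 = center Frequency, gain_db = Gain (boost/cut), q = Q (width).
    Coefficients are normalized so that a[0] == 1.
    """
    a_lin = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha / a_lin
    b = [(1.0 + alpha * a_lin) / a0, -2.0 * cos_w0 / a0, (1.0 - alpha * a_lin) / a0]
    a = [1.0, -2.0 * cos_w0 / a0, (1.0 - alpha / a_lin) / a0]
    return b, a


def response_db(b, a, f: float, fs: float = FS) -> float:
    """Gain in dB of one biquad at frequency f."""
    z_inv = cmath.exp(-2j * math.pi * f / fs)
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return 20.0 * math.log10(abs(num / den))


def eq_response_db(bands, f: float) -> float:
    """The bands run in series, so their dB responses simply add."""
    return sum(response_db(b, a, f) for b, a in bands)


# Usage: hypothetical 5-band settings as (Frequency Hz, Gain dB, Q).
settings = [(80, 3.0, 0.7), (400, -2.0, 1.0), (1_000, 0.0, 1.0),
            (3_000, 2.5, 1.4), (10_000, 1.5, 0.7)]
bands = [peaking_band(f0, g, q) for f0, g, q in settings]
for f in (80, 400, 3_000):
    print(f"{f:>5} Hz: {eq_response_db(bands, f):+.2f} dB")
```

At its center Frequency a band delivers exactly its Gain setting; a larger Q narrows how far that boost or cut spreads to neighboring frequencies, so overlapping bands add together.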

In PERFORMANCE mode or in Song/Pattern MIXING mode, you can set up the Master EQ on a per-program basis:

• Press [EDIT]

• Press [COMMON EDIT]

• Press [F2] LEVEL/MEF

• Press [SF3] MASTER EQ

Conclusion and final thoughts

Signal flow is the most important thing to get a handle on when you are seeking a better understanding of audio. This is particularly true when it comes to effective effect processing. The MOXF uses professional mixing console routing as the basis for how signal flows through the synthesizer. A Voice or Part is like a musician playing an instrument. Imagine a guitar player with a wah-wah pedal and a combo amp. These are like his Insertion Effects: he plugs the guitar into the wah-wah pedal and then into his combo amp. Insertion Effects are controllable in real time by the player, and this is an essential part of performing. That is the guitar Voice in Voice mode.

Now take that player and his rig to a recording studio; this is the Sequencer mode. When you activate the INSERTION SWITCH for the PART containing the Guitar Voice, it is as if the player brought along his wah-wah pedal and combo amplifier from home, and he can manipulate them in real time as he performs.

In the studio (SONG MIXING mode), the player is plugged into the console, and the guitar channel has two auxiliary sends. One connects to the studio’s reverberation chamber; the other can be routed to some sort of delay/chorus/flanger (as the session requires).

That is what you have here in the MOXF: real-time control over personal effects, plus a mixing console’s Send/Return arrangement with the System Effects.

To continue the analogy: if you route a signal to a direct out on a mixing console, you interrupt the signal in the patchbay. This takes that channel out of the main mix and lets you route it, in isolation, to some other destination. The interruption also removes the channel from the auxiliary sends (the ones feeding the Reverb and Chorus processors), but that is precisely the point: you are interrupting it because you want to process the signal in isolation, separately.

When you route a PART of your MIXING program to a direct (assignable) pair of outputs, it is removed from the main stereo mix and is no longer pooled with the other Parts via the aux sends to the studio’s effects.
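The routing described above can be summarized as a tiny mixing-bus model. This Python sketch is deliberately simplified and entirely hypothetical: each Part’s audio is reduced to a single number, the effects are placeholders that pass their input through unchanged, Pan is ignored, and the output names (“MAIN”, “ASSIGN_1”) are made up. What it does show is the order of the blocks and the key point of the analogy: a Part sent to a direct output skips both the main mix and the System Effect sends.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Part:
    name: str
    level: float              # stand-in for the Part's audio after its Insertion Effects
    reverb_send: float = 0.0  # System Effect send levels, 0.0 .. 1.0
    chorus_send: float = 0.0
    output: str = "MAIN"      # "MAIN" or a hypothetical direct output name


def identity(x: float) -> float:
    return x


def mix_bus(parts: List[Part],
            reverb_return: float = 1.0,
            chorus_return: float = 1.0,
            chorus_to_reverb: float = 0.0,
            reverb: Callable[[float], float] = identity,
            chorus: Callable[[float], float] = identity,
            master_effect: Callable[[float], float] = identity,
            master_eq: Callable[[float], float] = identity,
            ) -> Tuple[float, Dict[str, float]]:
    """Return (main stereo bus, direct outputs) for a simplified console-style mix."""
    main = reverb_in = chorus_in = 0.0
    direct: Dict[str, float] = {}
    for p in parts:
        if p.output != "MAIN":
            # Like a console direct out: out of the main mix AND the aux sends.
            direct[p.output] = direct.get(p.output, 0.0) + p.level
            continue
        main += p.level
        reverb_in += p.level * p.reverb_send
        chorus_in += p.level * p.chorus_send
    chorus_out = chorus(chorus_in)
    reverb_out = reverb(reverb_in + chorus_out * chorus_to_reverb)  # Chorus -> Reverb
    main += chorus_out * chorus_return + reverb_out * reverb_return
    # The Master Effect is "post" everything but the Master EQ, which comes last.
    return master_eq(master_effect(main)), direct


# Usage: the guitar stays in the main mix; the bass goes to a direct output.
guitar = Part("Guitar", 1.0, reverb_send=0.5, chorus_send=0.25)
bass = Part("Bass", 1.0, reverb_send=0.5, output="ASSIGN_1")
print(mix_bus([guitar, bass]))  # (1.75, {'ASSIGN_1': 1.0})
```

Note that the bass keeps its 0.5 reverb send setting, yet contributes nothing to the Reverb: once it is routed to a direct output, the send has nothing to tap.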

More on this type of advanced routing in our next article, which will integrate USB audio recording into our discussion of Effects. You route a channel to a direct output when there is something you want to do to it in isolation.

© 2024 Yamaha Corporation of America and Yamaha Corporation. All rights reserved.