How's it going? Reddit directed me this way, so I hope someone can help. I've been building an FX chain in Propellerheads Reason for a neuro bass and I want to use a patch I've created on the MODX as my input into that FX chain. I'm using the MODX as my interface, so the routing goes:

MODX>Reason audio track> FXchain>MODX>Output

I have the audio track routed into the FX within Reason, but it does nothing to the sound. All it does is play the unaltered patch as it would sound coming from the MODX. When I record audio from the board and run that through the chain, though, all the effects work fine. It's really slowing down my workflow, though, having to record, decide I don't like it, adjust parameters on the patch, record, etc.

I could try setting up a MIDI module in Reason and playing the board from that input, then sending the audio from there back into an audio track in Reason. But I don't think that'd make a difference here, and anyone who works in Reason regularly will know just what a headache it is to output the External MIDI Device modules to audio.

All help welcome, this has been bugging me for way longer than I'm alright with. Thanks in advance

Hi Stuart,

Welcome to YamahaSynth!

Your MODX has 10 Audio Outputs via USB. You need to tell us which of the Outputs you are routing to the Audio Track.

Main L/R, USB 1/2, USB 3/4, USB 5/6, USB 7/8?

On your MODX

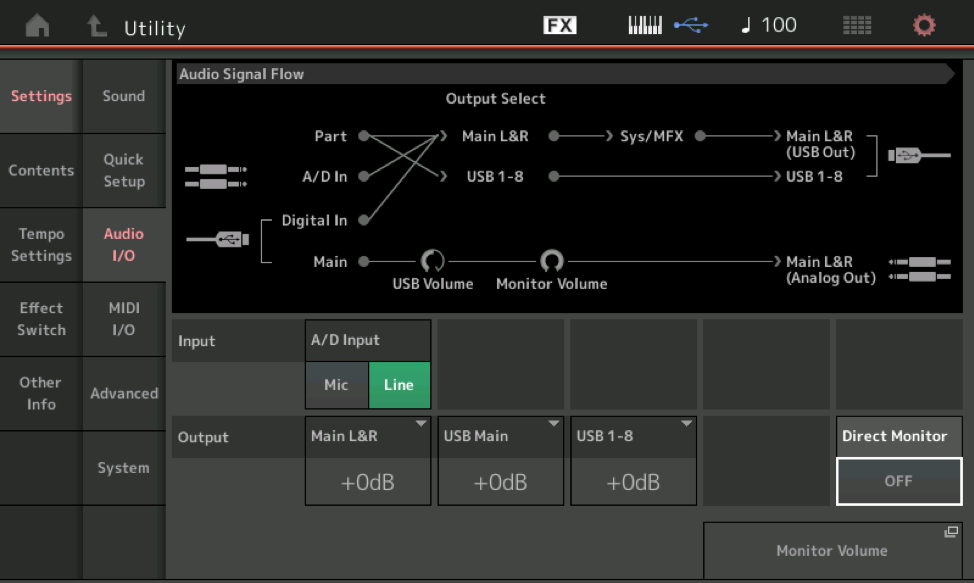

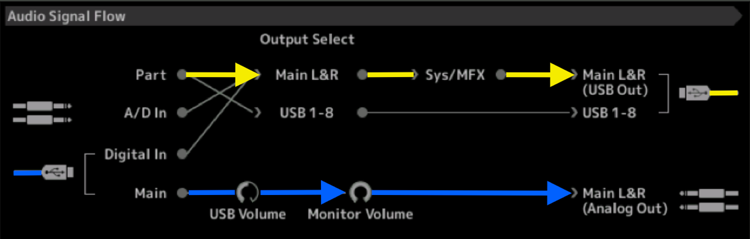

Press [UTILITY] > “Settings” > “Audio I/O” > set Direct Monitor = OFF

This will prevent the MODX from sending audio directly to the speakers.

This should solve your issue— IF IT DOES NOT THEN: you need to verify the rest of the Routing within your DAW.

WHY:

The MODX, as an audio interface, has two pathways to deliver audio to your speakers (Analog Out). One goes directly there. The other goes via USB to your DAW and returns, after processing, to the MODX via USB, then on to the Analog Outputs.

Study the Audio Signal Flow diagram.

When you turn Direct Monitor ON and OFF notice the change in signal flow.

When On, both pathways are active

When Off, only the USB route, the one that goes through your DAW, is available.
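For anyone who finds it easier to read as logic than as a diagram, the two pathways can be sketched roughly like this. It is only a toy model of the description above: the function name and the path strings are made up for illustration and are not taken from any Yamaha documentation.

```python
from typing import List


def paths_to_analog_out(direct_monitor_on: bool) -> List[str]:
    """Return the signal paths that reach the MODX Analog Outputs.

    Toy model only: the names and strings here are illustrative.
    """
    # The USB round trip through the DAW is available in both settings.
    paths = ["MODX -> USB -> DAW (FX chain) -> USB -> MODX -> Analog Out"]
    if direct_monitor_on:
        # With Direct Monitor ON, the unprocessed signal also goes straight out.
        paths.insert(0, "MODX -> Analog Out (direct, unprocessed)")
    return paths


for setting in (True, False):
    print("Direct Monitor =", "ON" if setting else "OFF")
    for path in paths_to_analog_out(setting):
        print("   ", path)
```

With Direct Monitor ON you hear the dry path on top of (or instead of) the processed one, which is why the FX chain seems to do nothing.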

If you are not hearing anything when you set Direct Monitor = OFF, the issue is within the DAW.

Let us know.

Woah, I'm actually on with the Bad Mister. What's up bro? You're an absolute Legend. Yeah, it appears to be a problem with my routing in the DAW. While I've got you here, I just wanted to ask you something. Are there any resources you can recommend for in-depth learning of the MODX? I love this synth, man. Its complexity and flexibility were the main reason I bought the thing. But, the Reference Manual is so dense. It's just not practical to have as PDF to look through and I'd prefer not to have to get the thing printed and bound, although right now that's looking like my best option. So far, most of everything I know about it has come from screen grabs of this forum, or just guys on Youtube explaining things about it and it's honestly very hit and miss. This site, for whatever reason doesn't load up right for me when I'm using a VPN, hence the late reply. Thanks again for the speedy reply, mad respect for the job you do educating the masses here.

Are there any resources you can recommend for in-depth learning of the MODX?

This website (YamahaSynth.com) Articles, Tech Talks, Videos, Forum...

The Music Production Guides are a very valuable resource:

LINK: http://www.musicproductionguide.eu/mpghistory/history_en.htm#2020

Careful with random, well-meaning folks and their online videos... I’ve watched a few that simply gave incorrect information. (Drives me crazy sometimes.) Also, don’t have a favorite method; try to learn multiple ways to do things, then apply the best one for the task.

If you only learn to use Pattern record, for example, and never explore MIDI Song, you ultimately are doing yourself a disservice.

And vice versa.

But, the Reference Manual is so dense. It's just not practical to have as PDF to look through and I'd prefer not to have to get the thing printed and bound, although right now that's looking like my best option. So far, most of everything I know about it has come from screen grabs of this forum, or just guys on Youtube explaining things about it and it's honestly very hit and miss.

A couple of thoughts here on the Reference Manual: You are correct, it is dense and rightfully so... here are some tips on how to make the best use of the documentation.

You don’t have to print it out... it is actually more valuable as a PDF. Keep both the latest Reference Manual and the most recent Supplementary Manual (rather than reprinting the entire Reference Manual with each update, a Supplementary Manual is released with each major update).

Keep these nearby, either on a tablet or computer. Because you can cross-reference and search by keyword, these will be invaluable.

They are Reference Manuals, which means you should not read them like a novel; they do not help much that way. Use them to “look up” items when necessary.

The Owner’s Manual should be read - it is the one that gives the theory of operation (it is printed).

The Reference and Supplementary Manuals define what each parameter does... and should be kept as PDFs.

If you wanted to learn about a car’s or an airplane’s dashboard, the Reference Manual would dutifully define each control, left to right, but not really help you learn to drive or fly.

The Owner’s Manual contains Quick Guides which will, step-by-step, take you through a routine... and is why it is printed... you should do each of these Quick Guides at some point, to get a feel for workflow (you don’t get that from the Reference Manual or Supplementary Manual - you simply get the definitions).

Bad Mister Tip: separate your learning sessions from your creative sessions. In other words, when trying to learn the Pattern Sequencer... don’t split your brain trying to both write a new song while learning the process of recording/overdubbing/editing. Rather concentrate on the process — this will be helpful because it will allow you to proceed with *learning*. You don’t learn to drive by getting behind the wheel of a car and driving cross country. You practice the processes first.... left turns, right turns, reverse, etc.

If you are recording a Pattern don’t interrupt learning the process with stopping to mull over the selection of each sound. Rather concentrate on the process. Pick three or four instruments in a Performance and just work on the process... drums, bass, keys, period.

Later when you are familiar with the process - then get into making “production decisions” — learn to separate *learning* from creating. Once you learn the routines, then you can apply them creatively, and even design your own unique workflow.

This is the biggest mistake I see when asked the best way to master any topic. This and *trying to work the way you always used to work*.

Try to be open enough to recognize that when something provides a new set of features, it may not fit neatly into how you *used to work*.

Learn to work the new item, then make the appropriate adjustments to *include* the new item into your world.

You see many folks struggling with MIDI Channel reassignment... (or the lack of it).... even though they never have worked with recording a multiple MIDI channel program, never having morphed between multiple Parts across multiple channels, never having even attempted to see what possibilities this brings to the creative table.

If you have always manipulated controllers with CC messages in your sequencer, and that is what you want to force the synth to do (and you can), you may never discover that the multiple PARTs of this engine allow for a different approach. When you layer 4 Parts on a single Channel (traditional old style) for example, and you wish to manipulate the Cutoff Frequency... cc74 right? All the filters do the same thing... when these four Parts are separately addressed by the Super Knob/AssignKnob control matrix, you can address each of the active filters, individually. A remarkable sonic difference.... you can be opening the filter a little on the bass, while opening it a lot on the Rhodes and closing it slightly on the cellos and contrabasses, while doing a very gradual change on the violas and violins, and so on...
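To make the difference concrete, here is a rough sketch in Python. The Control Change byte layout (status 0xB0 plus channel, controller number, value) is standard MIDI. The per-Part "depth" table is purely hypothetical: it only stands in for the idea that one knob can drive each Part's filter by a different amount, and it is not how the MODX stores its control assignments.

```python
from typing import Dict

CC_CUTOFF = 74  # controller number conventionally used for filter cutoff/brightness


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Build a raw 3-byte MIDI Control Change message (channel is 1-16)."""
    if not 1 <= channel <= 16:
        raise ValueError("channel must be 1-16")
    if not (0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("controller and value must be 0-127")
    return bytes([0xB0 | (channel - 1), controller, value])


def per_part_offsets(knob: int, depths: Dict[str, float]) -> Dict[str, int]:
    """Scale one knob position (0-127) by a hypothetical depth per Part."""
    return {part: round(knob * depth) for part, depth in depths.items()}


# Four Parts layered on channel 1: one message, every filter gets the same value.
shared = control_change(1, CC_CUTOFF, 100)
print(shared.hex(" "))

# One knob, different (made-up) depths per Part: each filter moves differently.
depths = {"bass": 0.1, "rhodes": 0.8, "cellos": -0.1, "violins": 0.3}
print(per_part_offsets(100, depths))
```

The first print shows a single message that all four layered Parts would obey identically; the second shows one knob position producing a different offset for each Part, including a negative one that closes a filter while the others open.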

If all you have is a hammer, every problem starts looking like a nail - and you may never discover a whole new world of control possibilities.

Most of all take your time and have fun. If you are not having FUN, you are doing it wrong...

Here’s why I feel separating learning from creating is important... if you totally mess up on things during a learning session, there is no anxiety about how you lost some genius composition... I record a blues (I got a million of them) I learn the button-pushing processes on a blues. When I am comfortable with the process, only then do I attempt to get more creative.

I mess up on the blues, no problem, no cry...

If I was trying to learn how it all works in the middle of a “work for hire” or a serious composing session, then the anxiety, frustration, and the “I want to throw the thing out the window-syndrome” will be in full effect. Avoid this scenario!

Hope that helps!

This site, for whatever reason doesn't load up right for me when I'm using a VPN, hence the late reply

Make sure you have your options set to accept cookies... in researching this, the majority of issues come down to settings on the computer. In order to better serve musicians, Yamaha gathers information (and as you may know, synthesizers are just one of the instrument categories that the company participates in). If you want to participate and post here on the Forum, please log in to Yamaha, then into the YamahaSynth site... and enable or allow cookies.

You absolute badass. Thanks a million, you actually don't know just how useful that answer is.

Thanks man

I have the same issue. Say you've made an instrumental/beat on the MODX with MIDI recorded in your DAW, and you record vocals in your DAW: your mastering in the DAW will not affect the instrumental from the MODX. The only way is to export the audio from the MODX into your DAW. This poses a problem, especially if you have more than 5 stereo USB outs/Parts from the MODX. First, you have to route the first 5 USB stereo outputs and then the next (but when assigning the next, you have to deassign the previous tracks). It's a convoluted and cumbersome workflow, if you ask me. God help you if you've mastered your song and you discover you need to change a section of a part in the MODX: that's a web of assigning and deassigning. Automation of parts in the MODX is another headache (I've found my way round it, but it's still a fidgety and long workflow by 2021 standards; imagine trying to automate a 3-part performance in a simple 10-track instrumental). The best thing would have been to have the MODX behave just like a VSTi, where the MIDI tracks respond to both track FX and master FX in the DAW. That way, you can finish your project, including mixing and mastering, and export the final song from the DAW. Makes life easier. It just makes me question my purchase as a producer: should I have invested half of the cost of the MODX8 in good VSTis and bought a cheaper, decent board for gigs? I was sold on the usability of the MODX in a DAW and really want it to be the centre of my production in terms of sounds, but this workflow is a headache. I hope this is all due to a knowledge gap I have - if so, any help will be appreciated.

I hope this is all due to a knowledge gap I have - if so, any help will be appreciated.

It is! A huge knowledge gap… hopefully we can help fill in some of the major misconceptions you have.

First, I’m not clear how this is the same problem.

Next, you make a series of incorrect statements which, if you believe them to be true, are clouding your ability to find a suitable workflow.

One of the most common misconceptions about recording and working with any synthesizer and DAW is any belief that begins:

“The only way…” If there was a big 1200 Watt buzzer I could push to show just how WRONG that statement is… I’d be leaning on it very hard right now! lol…

The thing about music production, and anyone that has followed my instructions over the years has heard me say, “…there is no one-way to do most things.”

I’ve discovered that many users always record MIDI first. Or they always think they need to record MIDI first. Then, upon talking with them, we discover they really don’t know WHY they are doing that… it’s just something they have always done. If you are not going to event Edit, if you’re not going to do notation, if MIDI is not your finished production goal… WHY are you recording MIDI?

The point being made here is: analyze your goal… and decide if recording MIDI is going to benefit you. You want to be comfortable enough with your tools that you record as MIDI the Parts you wish to event Edit, and record the others directly as Audio.

Redo in audio is handled by Punch In/Out (add that skill) or a complete ‘do over’.

The MODX/MONTAGE offer several things that cannot be captured as MIDI. This means that documenting them in your DAW requires you to record Audio! If you always do the one MIDI Track at-a-time method of recording (because you always used to…) maybe it’s time to broaden your workflow.

The other supersized misconception is that everything needs to be routed to its own Audio Track. Somebody please press that 1200 Watt buzzer… again! This is simply NOT the case. In fact, unless you are going to process an instrument separately in your DAW, it likely does not need to be isolated… mileage can, of course, vary. But say, as you are currently thinking… that you take a separate stereo audio output for each MIDI Part… right away there’s a waste problem.

For example, in all likelihood your Bass sound is mono (as in not stereo), why on earth would you assign this a Stereo bus to the DAW?

Record as stereo only those things that will benefit from being recorded in stereo. A B3 organ is typically sampled in mono, but the Rotating Speaker Effect requires stereo in order to ‘rotate’. The MODX has ten USB bus outputs (Stereo plus 8 assignable)… The MONTAGE has thirty-two USB bus outputs (Stereo plus 30 assignable). The assignable outputs can be configured as odd/even stereo pairs or individual mono buses, as you may require.
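As a rough illustration of the bus arithmetic, here is a small Python sketch that hands out the 8 assignable MODX USB channels, giving stereo Parts an odd/even pair and mono Parts a single channel. The function, the Part names, and the "USB1&2" style labels are assumptions made up for this example; they are not a Yamaha API or the exact on-screen names.

```python
def assign_usb_buses(parts, assignable=8):
    """Greedy allocation of assignable USB channels.

    parts is a list of (name, is_stereo) tuples. Illustrative only.
    """
    free = list(range(1, assignable + 1))
    routing = {}
    # Place stereo Parts first so they land on intact odd/even pairs.
    for name, is_stereo in sorted(parts, key=lambda p: not p[1]):
        if is_stereo:
            left = next((c for c in free if c % 2 == 1 and c + 1 in free), None)
            if left is None:
                raise ValueError(f"no free odd/even pair left for {name!r}")
            free.remove(left)
            free.remove(left + 1)
            routing[name] = f"USB{left}&{left + 1}"
        else:
            if not free:
                raise ValueError(f"no free channel left for {name!r}")
            routing[name] = f"USB{free.pop(0)}"
    return routing


parts = [
    ("kick", False), ("snare", False), ("hihat", False), ("bass", False),
    ("organ", True),       # rotary speaker effect needs stereo
    ("sweetening", True),  # strings/pads/bells sharing one stereo feed
]
routing = assign_usb_buses(parts)
for name, bus in routing.items():
    print(f"{name:<12}{bus}")
```

These six sources fit into the 8 assignable channels in one pass. Treat all six as stereo and the same call raises an error, because that would need 12 channels.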

More often than taking each Part to its own Audio Track, I wind up rendering audio based on what the specific Project requires.

For example, on pop/rock and r&b projects, I often take separate audio bus outputs on the Kick, Snare, Hihats — in many such projects much of the ‘personality’ of the composition is tied up in the drum and rhythm section sounds. I might do one complete pass rendering the DRUM KIT sounds. Once rendered as audio, I take the MIDI tracks and drop them into a Muted Folder.

Things like sweetening, for example, may share a stereo bus (strings, pads, bells, etc.). I don’t need each separately; I combine them into one stereo feed. If I need to adjust the balance between them, I can still do that using MIDI automation*.

The amount of MIDI automation found in these Motion Control Synthesis Engines (MODX/MONTAGE) is massive and very often completely overlooked by those who insist on only working as they always used to (see my posts above).

I wrote an article, years ago, on “production decisions”… if the work becomes tedious, tiresome, or how did you put it… “convoluted and cumbersome” you should seriously think about hiring (and paying) someone else to do it for you. I got into audio engineering to add it to my toolkit as a musician… I can’t ever remember those feelings that it’s too much work. As a recording engineer, it forces you to get into someone else’s workflow… you cannot afford to let things get too complicated. If it’s your own music — that statement does not compute for me. Get ‘er done!

To take an individual audio output on each and every Part is not only NOT necessary, in most cases, but can absolutely lead, for some, to a convoluted and cumbersome workflow, especially when you need to undo something. So why are you even thinking about painting yourself into that corner?

A separate Audio Output on each Part means you are disassembling your MIDI mix, only to have to reconstruct a new mix in audio. Starting to feel like perhaps you are working too hard? Many of the questions I answer here in the Forums deal with: “how come I can’t get enough record level from my tracks?” This is a person who is not appreciating the definition of Velocity Sensitivity: that the Velocity with which a note-on is played directly affects its total audio output level. Oh, they understand this, they just don’t connect it with WHY their attempt to render audio now results in a weak audio level. If you record your MIDI Track paying no attention to your Velocity, you may wind up with wimpy audio record levels. Once you are aware of this connection, suddenly you’ve entered a space where this stops happening to you.
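A quick way to spot this before rendering is to look at the note-on velocities in the MIDI Track. The sketch below is a minimal Python example that assumes you already have the velocities as a plain list of integers (1-127); the helper name and the threshold of 64 are arbitrary choices for illustration, not a rule from any manual.

```python
from statistics import mean


def velocity_report(velocities, quiet_below=64):
    """Summarize note-on velocities and flag a take that was played softly.

    quiet_below is an arbitrary, illustrative threshold.
    """
    if not velocities:
        raise ValueError("no note-on velocities given")
    average = mean(velocities)
    return {
        "min": min(velocities),
        "max": max(velocities),
        "mean": average,
        "likely_quiet": average < quiet_below,
    }


soft_take = [38, 42, 35, 50, 44]  # made-up example data
report = velocity_report(soft_take)
print(report)
```

If a take is flagged, the fix belongs on the MIDI side (replay it or adjust velocities) before you render, rather than cranking the record level afterwards.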

Steinberg Invents VST

Seems a definition of VST and VSTi should be added to your world. Virtual Studio Technology comes in two main categories: Instruments and Effects. Steinberg innovated the concept of including *external* devices into the world of the Digital Audio Workstation (DAW) back in 1996. ‘External’ referred to any device outside of the DAW software itself. This included any software synthesizer plugins that ran on the computer’s CPU, and/or any hardware synthesizer that ran on its own power and CPU. It also included any software Effect processing plugins, as well as any hardware Effect processing. The advanced Delay Compensation in Cubase positions your data so this is possible.

If you want to set up your MODX as a VSTi, recognize that this is simply a routing scenario. Setting up a MODX/MONTAGE as a VSTi allows you to open multiple MODXs or multiple MONTAGEs within the same session… you can FREEZE audio (a function that takes your MIDI Track, renders a temporary audio file, and then mutes the original MIDI Track). The audio (referred to as ‘virtual’) is returned via the same routing scheme any software synth would use… meaning not only is Freeze available, but you can use Export Audio Mixdown, you can process your MODX with plugin Effects… the ‘temporary’ or virtual audio is available for you to manipulate and try things out before committing to them.

When you have it just the way you like, you can render a ‘real’ audio track.

You keep your original MIDI recording in a muted folder. Now if you need to UNDO or change something, you simply return to your original MIDI data and go at it again.

You are using Reason… I cannot help you with running hardware VSTi’s there… but Cubase Pro allows this advanced routing setup and allows you to capture your music in more flexible ways.

Any statement that begins “the only way…” should be changed to a question, because almost whatever you say after that is just NOT going to be true.